Academics warn new science papers are being generated with AI chatbots


Chatbots are prone to hallucination, a phenomenon in which they present illogical or nonsensical answers as facts [File] | Photo Credit: REUTERS

Social media users and academics are warning that a growing number of scientific papers show signs of having been partially generated with the help of AI chatbots.

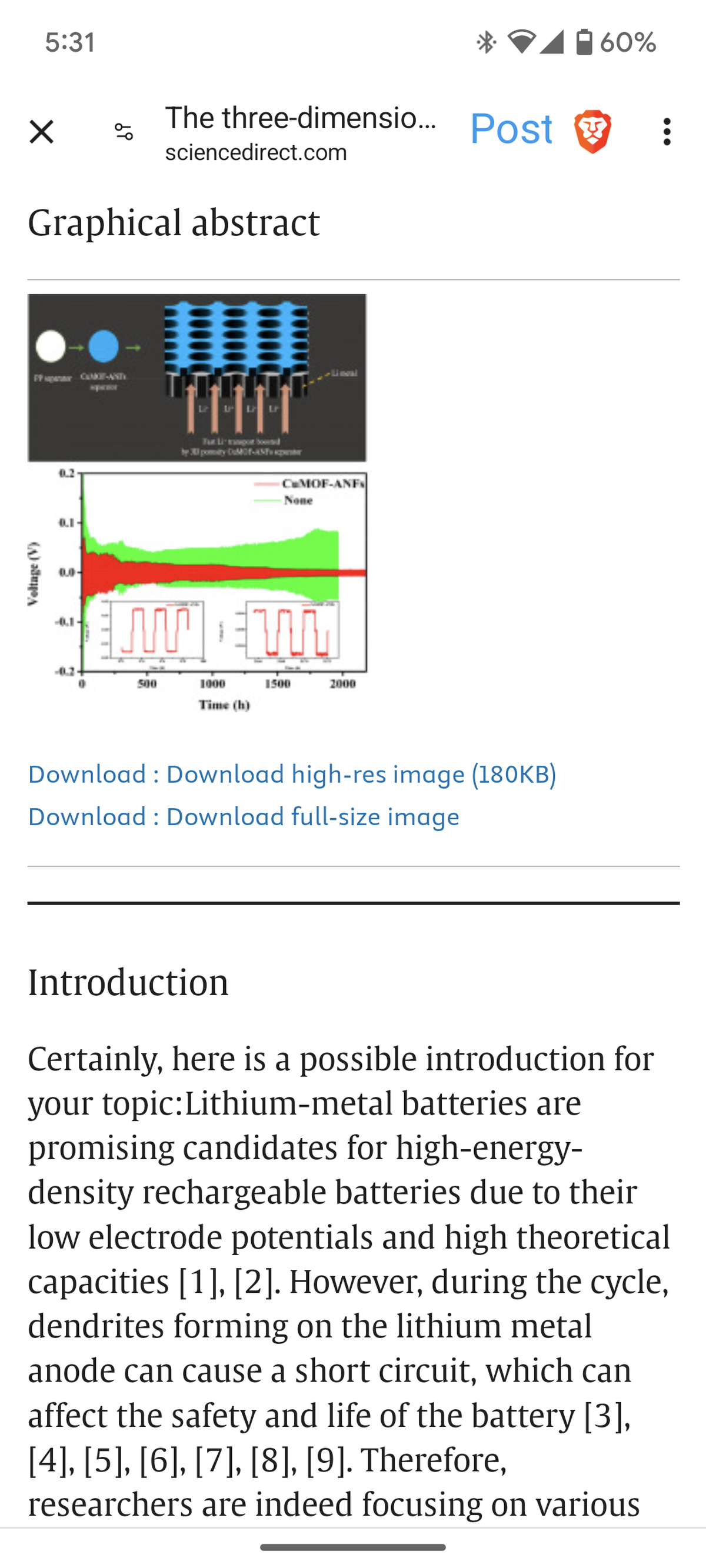

For example, a paper titled ‘The three-dimensional porous mesh structure of Cu-based metal-organic-framework – aramid cellulose separator enhances the electrochemical performance of lithium metal anode batteries’, published on ScienceDirect in March 2024, began its introduction with the phrase “Certainly, here is a possible introduction for your topic:” before covering the subject at hand.

The phrase is commonly used by chatbots such as OpenAI’s ChatGPT when responding to user queries.


As of Friday, the paper was still accessible through ScienceDirect, and its introduction had not been edited or updated. ScienceDirect provides digital access to the works of the Dutch academic publisher Elsevier.

The introduction of the paper shows a stock phrase commonly used by chatbots | Photo Credit: ScienceDirect

Elsevier is known for its stringent stance against digital piracy and has pursued legal cases against shadow libraries providing free downloads of its publications.

Meanwhile, a Google Scholar search for the stock phrase “as of my last knowledge update”, most commonly used by ChatGPT, turned it up in several academic journals, tech outlet 404 Media reported on March 18.

Most of these phrases were found in papers that investigated ChatGPT and its responses, but others were present in research papers that covered non-AI subjects.

“As of my last knowledge update in 2021, approximately 65% of India’s population is under the age of 35,” was one sentence in a paper titled ‘Youth at Risk: Understanding Vulnerabilities and Promoting Resilience’ by Dr. Priyanka Beniwal and Dr. C.K. Singh (2023).

The publisher, Weser Books, appeared to be a self-publishing service.

Novelists and journalists have accused the tech companies building chatbots of using their copyrighted works without consent to train AI models. Companies hit with lawsuits include OpenAI, Microsoft, Google, and Meta.

Chatbots are also prone to hallucination, a phenomenon in which they present illogical or nonsensical answers as facts. This raises the risk of incorrect data making its way into costly, peer-reviewed scientific publications used by scholars worldwide.